Solving Power Capacity Challenges with Software Defined Power

This article introduces the Intelligent Control of Energy (ICE®) solution for complete power management of data centers and similar network and IT infrastructures.

Running out of power is a constant concern for the operators of data centers and similar IT and communications infrastructure. The fight for footprint optimization while boosting processing and storage capabilities is a never-ending battle. However, the inefficiencies and underutilization of current power supply infrastructures that are designed to meet peak demand can now be avoided using a combination of hardware and software to even out supply loading and optimize the available capacity.

This intelligent use of available power can be realized with software tools that profile usage and recognize priority tasks. Utility power can then be supplemented with battery storage to supply peak demand using power stored during low utilization periods. Similarly, low-priority workloads can be assigned to server racks that are only powered when there is sufficient supply capacity. In this way the system can respond to peak demand while managing other tasks to spread the power load.

In much the same way that Software-Defined Data Centers allow self-serviced users to deploy services and workloads in seconds, this approach to Software Defined Power® unlocks the underutilized power capacity available within existing systems. This allows a data center’s server processing and storage capacity to expand without increasing power supply capacity and achieves considerable capital expenditure savings by not overprovisioning power. Furthermore, the use of battery storage to provide peak shaving and load leveling can also enable UPS functionality within a data center or server rack to protect it from power outage.

Understanding the data center ‘power challenge’

The demand for Cloud data services continues apace as businesses and individual consumers become ever more reliant on remotely stored data that can be accessed over the Internet from almost anywhere. In addition, Cisco has estimated that the emergence of the Internet of Things will result in some 50 billion “things” connected to the Internet by 2020 as a myriad of sensors and controls enable smart homes, offices, factories, etc. Combined with more established applications, this is forecast to require a daily network capacity in excess of a zettabyte (10^21 bytes) as early as 2018.

Servicing this demand and scaling up the capacity of networks and data centers is inevitably challenging, especially as customer requirements can ramp up rapidly. While the IT industry faces pressure to scale data centers, one of the most constrained resources is power. It is often the case that the power capacity of existing data centers is exhausted well before they run out of storage or processing capacity. The two main factors behind this power capacity limitation have been the need to provide supply redundancy and the way power is partitioned within data centers. Both take up significant space and, more importantly, leave untapped power capacity idle. This is despite the fact that current server designs are far more power efficient than previous generations and have significantly lower idle power consumption.

Providing additional power capacity within a data center is also time consuming and expensive even assuming that the local utility can supply the additional load, which IDC forecasts could double from 48GW in 2015 to 96GW by 2021 for a typical data center. From a capital expenditure standpoint, as shown in figure 1, the power and cooling infrastructure cost of a data center is second only to the cost of its servers.

Figure 1: Data center monthly-amortized costs (source: James Hamilton’s blog)

The nature of Cloud services also means that demand can fluctuate dramatically with a significant difference between the peak and average power consumed by a server rack. Consequently, providing enough power to meet peak-load requirements will clearly result in underutilization of the installed power capacity at other times. Also, lightly loaded power supplies will always be less efficient than those operating under full-load conditions. Clearly any measure that can even out power loading and free up surplus supply capacity has to be welcome in enabling data center operators to service additional customer demand without having to install extra power capacity.

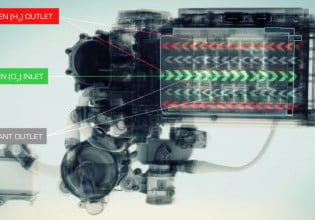

With regard to efficiency considerations, servers and server racks use distributed power architectures where the conversion of power from ac to dc is undertaken at various levels. For example, a rack may be powered by a front-end ac-dc supply that provides an initial 48 Vdc power rail. Then, at the individual server or board level, an intermediate bus converter (IBC) would typically drop this down to 12 Vdc, leaving the final conversion to the lower voltages required by CPUs and other devices to the actual point-of-load (POL). Distributing power at higher voltages helps efficiency by minimizing down-conversion losses and by reducing the resistive power losses in cables and circuit board traces, which are proportional to the square of the current and to the conductor length.
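To make the resistive-loss argument concrete, the sketch below compares the power dissipated in a distribution path when the same load is fed at 48 Vdc and at 12 Vdc, using P_loss = I²R with I = P/V. The 1 kW load and 5 mΩ path resistance are illustrative assumptions, not figures from this article.

```python
def resistive_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Power dissipated in the distribution path: P_loss = I^2 * R, with I = P / V."""
    current_a = power_w / voltage_v
    return current_a ** 2 * resistance_ohm


# Illustrative assumptions: a 1 kW load fed through a 5 milliohm path
LOAD_W = 1000.0
PATH_OHM = 0.005

loss_48 = resistive_loss_w(LOAD_W, 48.0, PATH_OHM)
loss_12 = resistive_loss_w(LOAD_W, 12.0, PATH_OHM)

print(f"Loss at 48 V: {loss_48:.2f} W")
print(f"Loss at 12 V: {loss_12:.2f} W")
print(f"Ratio (12 V / 48 V): {loss_12 / loss_48:.1f}")
```

Because the current falls by a factor of four when the voltage rises from 12 V to 48 V, the loss in the same conductor falls by a factor of sixteen, whatever load and resistance values are assumed.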

The more recent migration to digitally controllable power supplies has allowed the introduction of Software Defined Power® techniques that can monitor and control the loading of all the power supplies. This allows intermediate and final load voltages to be varied so that the various supply stages can always operate as efficiently as possible. Nevertheless, further improvements in hardware performance are reaching their limits and other solutions are needed.

The problem with existing data center power provisioning

Traditional data center power supply architectures are designed to provide high availability using supply redundancy to cope with mission critical processing workloads. This is illustrated by figure 2, which shows a 2N configuration that provides the 100% redundancy requirements expected of a tier 3 or tier 4 data center. As can be seen, for a dual-corded server this provides independent power routing from separate utility supplies or backup generators with the additional protection of intermediate redundant uninterruptible power supplies (UPS). Even single-corded servers have the security of a backup generator and a UPS.

However, implicit in this approach is the usually false assumption that all the servers are handling mission critical tasks and that the loading on each (and hence the power demand) is equal. In reality, up to 30% of the servers could be handling development or test workloads, meaning that half the power provisioned for them is not really required. In other words, 15% of the total data center power capacity is blocked from use elsewhere.

Figure 2. A 2N 100% redundancy power architecture for a tier 3/4 data center

The other issue is that, conventionally, supply capacity is designed to provide sufficient power for peak CPU utilization. The variability in server power consumption that this results in can be simply modeled by the following linear equation:

$$P_{\text{server}} = P_{\text{idle}} + u\,(P_{\text{full}} - P_{\text{idle}})$$

where P_idle is the server power consumed when idle, P_full is the power consumed at full CPU utilization, and u is the CPU utilization expressed as a fraction between 0 and 1.
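The linear model can be evaluated directly, as in the minimal sketch below. The 100 W idle and 400 W full-load figures are hypothetical values chosen only to show how the spread between idle and peak power translates into variable demand.

```python
def server_power_w(utilization: float, idle_w: float, full_w: float) -> float:
    """Linear server power model: idle power plus a utilization-scaled share of the idle-to-full spread."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return idle_w + utilization * (full_w - idle_w)


# Hypothetical server: 100 W when idle, 400 W at full CPU utilization
IDLE_W = 100.0
FULL_W = 400.0

for u in (0.0, 0.25, 0.5, 1.0):
    print(f"u = {u:.2f}: {server_power_w(u, IDLE_W, FULL_W):.0f} W")
```

With these assumed values a server at 50% utilization draws 250 W, so capacity sized for the 400 W peak is well above what the server needs for much of the time.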

With new technology delivering lower idle consumption the difference between idle and full power becomes ever more significant. This spread becomes larger still at the rack level, making power capacity planning based on an assumed CPU utilization figure very challenging. Furthermore, the type of workload exacerbates the variability in power consumption. For example, Google found that the ratio between average power and observed peak power for servers handling web mail was 89.9% while web search activity resulted in a much lower ratio of 72.7%. So provisioning data center power capacity based on the web search ratio could result in underutilization by up to 17%.
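The arithmetic behind the quoted ratios can be checked with a few lines. This sketch takes one reading of the figures above: the unused headroom for each workload is one minus its average-to-peak ratio, and the gap between the two workloads is the difference between the ratios. The function name is a hypothetical helper.

```python
# Ratios of average power to observed peak power, as quoted in the text
AVG_TO_PEAK = {"web mail": 0.899, "web search": 0.727}


def idle_headroom(avg_to_peak: float) -> float:
    """Fraction of peak-provisioned capacity that is unused on average."""
    return 1.0 - avg_to_peak


for workload, ratio in AVG_TO_PEAK.items():
    print(f"{workload}: {idle_headroom(ratio):.1%} of provisioned power unused on average")

# Difference between the two workloads, in percentage points
gap = AVG_TO_PEAK["web mail"] - AVG_TO_PEAK["web search"]
print(f"gap between workloads: {gap:.1%}")
```

The gap of roughly 17 percentage points corresponds to the underutilization figure cited above.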

Unfortunately, it does not end there. The fear is that actual peaks might exceed those that have been modeled, potentially overloading the supply system and causing power outages. This leads planners to add extra capacity as a safety buffer. Consequently, it is not surprising to find that the average utilization in data centers worldwide is less than 40% purely as a result of peak demand modeling plus the additional buffering. This figure drops further when redundancy provisions are also included.

Unlocking underutilized power supply capacity

The peak versus average-power consumption issue discussed above clearly locks up considerable power capacity. Where peaks occur at predictable times and have a relatively long duration, data centers typically use local power generating facilities to supplement their utility supply, akin to how power utilities ramp up and down their generating capacity throughout the day to meet expected demand from consumers and business.

Unfortunately, the use of generating sets does not address the problem of peaks arising from more dynamic CPU utilization. These peaks have a higher peak-to-average power ratio, are shorter in duration, and occur more frequently. For these the solution is to provide battery power storage. The principle is simple: the batteries supply power when demand peaks and are recharged during periods of lower utilization. This approach, referred to as peak shaving, is illustrated by figure 3, which shows how a server rack that would normally require 16 kW of power can operate with 8 to 10 kW of utility power. Indeed, if utility power is constrained, the power step from 8 kW to 10 kW could be taken care of with locally generated power, holding the utility supply to a constant 8 kW.

Figure 3. By profiling power demand and employing battery storage it is possible to manage peak demand using power stored during low utilization periods
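The peak-shaving principle can be sketched as a simple simulation: when demand exceeds a supply cap the battery makes up the difference, and when demand is below the cap the spare supply recharges the battery. The hourly demand profile, the 8 kWh battery size, and the function name are all hypothetical, and conversion losses are ignored; this is an illustration of the principle, not a description of any particular product's control algorithm.

```python
def peak_shave(demand_kw, supply_cap_kw, battery_kwh, capacity_kwh, step_h=1.0):
    """Return (supply drawn per step in kW, battery level per step in kWh).

    Above the cap the battery discharges; below it, spare supply recharges
    the battery. Conversion losses are ignored for simplicity.
    """
    supply, levels = [], []
    for load in demand_kw:
        if load > supply_cap_kw:
            needed_kwh = (load - supply_cap_kw) * step_h
            if needed_kwh > battery_kwh:
                raise RuntimeError("battery exhausted: cap too low for this profile")
            battery_kwh -= needed_kwh
            supply.append(float(supply_cap_kw))
        else:
            spare_kwh = (supply_cap_kw - load) * step_h
            charge_kwh = min(spare_kwh, capacity_kwh - battery_kwh)
            battery_kwh += charge_kwh
            supply.append(load + charge_kwh / step_h)
        levels.append(battery_kwh)
    return supply, levels


# Hypothetical hourly rack demand in kW, with two 16 kW peaks
demand = [6, 6, 16, 8, 6, 16, 6, 6]
supply, levels = peak_shave(demand, supply_cap_kw=10, battery_kwh=8, capacity_kwh=8)

print("peak demand (kW):", max(demand))
print("peak supply drawn (kW):", max(supply))
print("battery level per hour (kWh):", levels)
```

In this assumed profile the rack peaks at 16 kW while the draw on the supply never exceeds the 10 kW cap, which mirrors the behavior described for figure 3.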

Optimization through dynamic redundancy

The false assumption, mentioned earlier, that all servers in tier 3/4 data centers are handling mission critical workloads can be mitigated by assigning non-critical tasks to specific low-priority server racks. This allows additional server capacity to be installed in the data center up to a limit defined by the maximum non-critical load. So, for example, in a full data center where the maximum server rack load of 400kW for all racks nominally requires dual 400kW supplies to provide 100% redundancy, it could be possible to provide additional low-priority server racks to service perhaps 100kW of non-critical workload. Then in the event that one of the 400kW supplies fails, power is cut to the low-priority server racks to ensure that the mission-critical racks receive full power from the alternate 400kW supply.

Using intelligent load management in this way can free up redundant supply capacity, which has no value-add, to provide a significant increase in a data center’s workload capacity (in this instance adding 25%) without the need for provisioning more power. Once again, a combined software and hardware solution can provide this dynamic management of power, monitoring and detecting a supply disruption and immediately switching to the alternative supply to ensure continued operation of the mission-critical server racks.
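The load-shedding decision in the 400 kW example can be sketched as a priority allocation: mission-critical racks are powered first, and low-priority racks are powered only if capacity remains. The rack names, the split of the critical load into two 200 kW groups, and the function name are hypothetical and serve only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class Rack:
    name: str
    load_kw: float
    critical: bool


def racks_to_power(racks, available_kw):
    """Power critical racks first, then low-priority racks while capacity remains."""
    powered, remaining = [], available_kw
    # sorted() is stable, so critical racks (key False) come first in their original order
    for rack in sorted(racks, key=lambda r: not r.critical):
        if rack.load_kw <= remaining:
            powered.append(rack.name)
            remaining -= rack.load_kw
    return powered


# Hypothetical layout: 400 kW of critical load plus 100 kW of low-priority load
racks = [
    Rack("critical-A", 200, True),
    Rack("critical-B", 200, True),
    Rack("dev-test", 100, False),
]

normal = racks_to_power(racks, available_kw=800)    # both 400 kW supplies healthy
degraded = racks_to_power(racks, available_kw=400)  # one supply has failed

print("normal operation:", normal)
print("after supply failure:", degraded)
```

With both supplies available every rack is powered; after one supply fails, only the mission-critical racks remain powered from the surviving 400 kW supply.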

The Intelligent Control of Energy (ICE®) solution

CUI has partnered with Virtual Power Systems to introduce the concept of peak shaving in a novel Software Defined Power® solution for IT systems. The Intelligent Control of Energy (ICE®) system uses a combination of hardware and software to maximize capacity utilization and optimize performance. The hardware comprises various modules, including rack-mount battery storage and switching units, which can be placed at the various power control points in the data center to support software decisions on power sourcing. The ICE software consists of an operating system that collects telemetry data from ICE and other infrastructure hardware to enable real-time control using power optimization algorithms.

Figure 4. CUI’s rack-mount ICE hardware for intelligent power switching and battery storage

To illustrate the system’s benefits, figure 5 highlights an ICE system trial at a top-tier data center. The trial has shown the potential to unlock 16MW of power from an installed capacity of 80MW. Furthermore, the capital expenditure in adding ICE was a quarter of the cost that would have been incurred in installing an additional 16MW of supply capacity, the time taken was a fraction of that needed for new capacity, and the ongoing operating expenditure is reduced.

Figure 5. The value proposition from installing ICE to unlock unused power capacity

Conclusion

Expanding the capacity of data centers to address the increasing demand for Cloud computing and data storage can often be constrained by available power. Sometimes this can even be a limitation of the utility supply in a particular location but, even if it isn’t, the ability to add more server racks may be restricted by the existing power and cooling infrastructure. Provisioning additional power capacity is costly, second only in cost to adding servers, so any means to improve the utilization of existing power sources has to be welcome.

Through its Intelligent Control of Energy (ICE®) solution, Virtual Power Systems, partnered with CUI, provides a complete power management capability for data centers and similar network and IT infrastructure applications. It maximizes capacity utilization through peak shaving and releases redundant capacity from systems that aren’t totally mission-critical. Importantly, its power switching and Li-ion battery storage modules can be readily deployed in both existing and new data center installations, with a reduction in total cost of ownership of up to 50%.

About the Author

Mark Adams has over 25 years of industry experience and has been instrumental in reorganizing CUI's sales structure and moving the company into advanced power products. Before joining CUI in 2009 Mark was a Sales Director at Zilker Labs for the 3 years leading up to their acquisition by Intersil, during which time Mark secured numerous design wins with some of the largest communication OEMs in the world. Prior to that Mark’s sales experience includes 7 years working in distribution with Future Electronics and a further 7 years as a manufacturer representative primarily supporting Xilinx FPGAs. Mark attended Central Washington University where he studied Business Marketing, received his commission from Army ROTC and served in the Army National Guard for 13 years. As Senior Vice President at CUI, Mark is involved in business development and strategic customer engagement.