Powering AI: Navitas Plans for More GaN, SiC Data Center Tech

Navitas has revealed an artificial intelligence (AI) data center technology roadmap that targets a threefold increase in power to support the exponential growth in AI power demand.

The rapid advancement and worldwide integration of AI technology have presented the industry with an unprecedented power dilemma. As AI algorithms grow in complexity and deployments grow in scale, so does the strain on energy resources. Many solutions are needed to ease the situation. From a power electronics perspective, the priority is more efficient power conversion.

Navitas has developed a roadmap to accommodate the exponential surge in AI power demand anticipated over the next 12-18 months. But how can power electronics help address the issue? And how does Navitas hope to provide a solution?

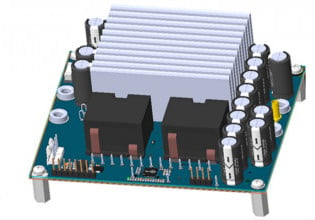

Navitas’ 4.5 kW platform for AI data centers. Image used courtesy of Navitas

The Energy Challenges of AI

ChatGPT's public launch less than a year ago sparked a surge in AI investment that is reshaping the digital world. This AI boom is, in turn, driving massive growth in data centers, which consume billions of dollars and vast amounts of energy. Projections suggest that data center energy consumption could double or triple by 2030, when data center power demand is expected to reach 35 gigawatts (GW).
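Gigawatts measure power (a rate), not energy. A figure like 35 GW becomes an annual energy total only once you assume how many hours the load actually runs. The minimal Python sketch below assumes a constant draw across all 8,760 hours of a year. The function name `annual_energy_twh` and its `utilization` parameter are illustrative and do not come from any published forecast.

```python
# Sketch: convert a sustained power draw (GW) into annual energy (TWh).
# Assumption: the load holds this level for the stated fraction of every hour
# in a year, so at utilization=1.0 the result is an upper-bound illustration.
HOURS_PER_YEAR = 8760  # 365 days x 24 h (ignores leap years)


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """Energy in TWh for a load of power_gw running at the given utilization."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    # GW x hours = GWh; divide by 1,000 to convert GWh to TWh
    return power_gw * HOURS_PER_YEAR * utilization / 1000.0


print(f"{annual_energy_twh(35):.1f} TWh")
```

At full utilization, 35 GW works out to roughly 307 TWh per year. Real data centers rarely draw peak power continuously, so treat this as a ceiling rather than a projection.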

In most respects, the largest energy consumer associated with AI is the training of AI models. Data centers now deploy AI servers with multiple GPUs, each drawing roughly 400 W, for a total of about 2 kW per server. Cutting-edge AI processors such as NVIDIA’s Hopper H100 already require up to 700 W each, and the upcoming Blackwell B100 and B200 chips are projected to reach 1,000 W or more by the following year. Figures like these make innovation in AI power technology essential for a sustainable digital world.
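The per-server figures above follow from simple multiplication. The text gives about 400 W per GPU and about 2 kW per server, which implies roughly five GPUs. The eight-GPU configurations in the sketch are a hypothetical assumption used only to show how the 700 W and 1,000 W per-chip figures would scale. The function `server_gpu_power_w` is illustrative and counts GPU power only.

```python
def server_gpu_power_w(num_gpus: int, watts_per_gpu: float) -> float:
    """GPU-only power for one server.

    Excludes CPUs, memory, fans, and power-conversion losses, so real
    server draw is higher than this figure.
    """
    if num_gpus < 0 or watts_per_gpu < 0:
        raise ValueError("inputs must be non-negative")
    return num_gpus * watts_per_gpu


# Five ~400 W GPUs reproduce the ~2 kW per-server figure cited in the text.
print(server_gpu_power_w(5, 400))
# Hypothetical 8-GPU servers using the per-chip figures from the text.
print(server_gpu_power_w(8, 700))
print(server_gpu_power_w(8, 1000))
```

Under these assumptions, moving from 400 W to 1,000 W parts raises GPU power per server from about 2 kW to several kilowatts. That growth is what pushes server power supplies toward the higher ratings discussed below.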

NVIDIA’s H100 computing platform. Image used courtesy of NVIDIA

From a power electronics perspective, the goal is to design efficient, reliable power systems so that energy goes into computing and training rather than being lost in power conversion. Meeting that goal calls for wide bandgap semiconductor materials such as silicon carbide (SiC) and gallium nitride (GaN), which are more efficient and robust than traditional silicon. Ultimately, the more efficient and reliable the power systems underlying AI computing are, the more efficient the entire data center can become.
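Power passes through several conversion stages between the grid and a processor, and the losses compound. The overall efficiency of stages in series is the product of the individual stage efficiencies. The minimal sketch below illustrates this. The three-stage chain and every efficiency value in it are hypothetical and chosen only for illustration; they are not Navitas or NVIDIA figures.

```python
from math import prod


def chain_efficiency(stage_efficiencies: list[float]) -> float:
    """Overall efficiency of power-conversion stages connected in series."""
    for eta in stage_efficiencies:
        if not 0.0 < eta <= 1.0:
            raise ValueError("each stage efficiency must be in (0, 1]")
    return prod(stage_efficiencies)


def input_power_w(load_w: float, stage_efficiencies: list[float]) -> float:
    """Power drawn from the source to deliver load_w through the chain."""
    return load_w / chain_efficiency(stage_efficiencies)


# Hypothetical chain: AC-DC supply, intermediate bus converter, point-of-load.
baseline = [0.94, 0.95, 0.90]
improved = [0.97, 0.97, 0.92]
for name, chain in (("baseline", baseline), ("improved", improved)):
    print(f"{name}: overall {chain_efficiency(chain):.3f}, "
          f"input for a 1 kW load = {input_power_w(1000, chain):.0f} W")
```

Because the efficiencies multiply, gaining a few points at each stage reduces the power drawn for the same 1 kW load by roughly 90 W in this hypothetical example. This is why improvements in the underlying power system add up across a data center.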

Navitas Leading the Charge in AI Data Center Power

Navitas has released its AI data center technology master plan for the near future.

At a high level, the roadmap aims to triple power capacity to meet the anticipated threefold rise in AI power needs over the next 12-18 months. To get there, the company will rely heavily on its GaN technology, which it says could reduce carbon dioxide emissions by over 33 gigatons by 2050. To that end, Navitas is developing server power platforms that scale from 3 kW to 10 kW.

Video used courtesy of Navitas

In August 2023, the company introduced a 3.2 kW GaN-based data center power platform that achieved a power density of over 100 W/in³ and an efficiency of over 96.5%. It later launched a 4.5 kW platform combining GaN and SiC, pushing power density above 130 W/in³ and efficiency above 97%. These advancements have spurred significant market interest: over 20 data center customer projects are underway, which the company expects to generate substantial revenue.
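The quoted efficiency and density figures translate directly into heat dissipated and approximate package volume. The sketch below applies two standard relations: loss = P_out / η − P_out, and volume = P_out / density. The numbers come from the text. Because Navitas states them as "over" these values, the computed losses and volumes are upper bounds. The helper names are illustrative.

```python
def conversion_loss_w(output_w: float, efficiency: float) -> float:
    """Heat dissipated by a converter delivering output_w at the given efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return output_w / efficiency - output_w


def volume_in3(output_w: float, density_w_per_in3: float) -> float:
    """Volume implied by a power density given in W/in^3."""
    if density_w_per_in3 <= 0:
        raise ValueError("density must be positive")
    return output_w / density_w_per_in3


# Figures quoted for the two platforms: (output W, efficiency, W/in^3).
# They are minimums ("over ..."), so loss and volume here are upper bounds.
platforms = {
    "3.2 kW GaN": (3200, 0.965, 100),
    "4.5 kW GaN + SiC": (4500, 0.97, 130),
}
for name, (p_out, eta, density) in platforms.items():
    print(f"{name}: loss <= {conversion_loss_w(p_out, eta):.0f} W, "
          f"volume <= {volume_in3(p_out, density):.1f} in^3")
```

The 4.5 kW platform dissipates slightly more heat in absolute terms (about 139 W versus 116 W). However, it delivers about 40% more power in a similar volume, and a smaller fraction of its input ends up as heat.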

Now, Navitas plans to introduce an 8-10 kW power platform by late 2024 to meet AI power requirements for 2025.
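A quick ratio check links this target to the "triple the power" goal. Measured against the 3.2 kW platform from August 2023, an 8-10 kW platform is about 2.5 to 3.1 times larger. The sketch assumes the 3.2 kW platform is the right baseline. The roadmap's "three times" may be measured differently, so treat this as an illustration only.

```python
def scale_factor(new_kw: float, baseline_kw: float) -> float:
    """How many times larger a new platform rating is than a baseline rating."""
    if baseline_kw <= 0:
        raise ValueError("baseline must be positive")
    return new_kw / baseline_kw


baseline_kw = 3.2  # assumed baseline: the August 2023 GaN platform
for target_kw in (4.5, 8.0, 10.0):
    ratio = scale_factor(target_kw, baseline_kw)
    print(f"{target_kw} kW is about {ratio:.2f}x the {baseline_kw} kW platform")
```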

Sealing the Data Center Power Deal

With the full platform launch expected in the final quarter of 2024, Navitas is signaling its commitment to pushing the boundaries of power density and efficiency for AI-driven technologies. Through higher efficiency and power density, the company hopes to help meet the growing demands of AI and pave the way for the data center of tomorrow.